Opportunity

In late 2023, the People Directorate approached Web Services about listing job vacancies. Putting jobs back on the website would make it easier for people to find and share that information.

Web Services wants to signal-boost the University’s opportunities far and wide. The website runs on Drupal, which we use to unify and deliver all kinds of content, from Stories to Courses, integrated into feeds and surfaced in the main search. We were confident that we could do something great with job vacancies.

The People Directorate publishes jobs to external boards through a third-party vendor, Broadbean. Jobs are also published on OneUniversity. Web Services determined that we would need to provide our own jobs board, which Broadbean could then connect to our systems. This would give the People Directorate team a smooth flow of information.

The process we decided on takes three simple steps:

- The People Directorate posts a job, detailing salary, grade, description, closing date, etc.

- Broadbean receives each job and displays it in a feed in the JSON data-delivery format.

- https://www.dundee.ac.uk polls the feed every 15 minutes, creating, updating, and archiving jobs.
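The three steps amount to a sync loop keyed on the job reference. The following is a minimal Python sketch of that idea, not how Feeds works internally: `SiteState` and `sync` are hypothetical names, the feed is assumed to be a list of dicts that each carry a `reference`, and "archiving" simply moves a job out of the live set.

```python
from dataclasses import dataclass, field


@dataclass
class SiteState:
    """Hypothetical stand-in for the job content held by the website."""
    live: dict[str, dict] = field(default_factory=dict)      # reference -> job data
    archived: dict[str, dict] = field(default_factory=dict)  # jobs no longer in the feed


def sync(feed_items: list[dict], site: SiteState) -> dict[str, list[str]]:
    """Apply one poll of the feed: create new jobs, update changed ones,
    and archive jobs that have dropped out of the feed."""
    report: dict[str, list[str]] = {"created": [], "updated": [], "archived": []}
    seen = set()
    for item in feed_items:
        ref = item["reference"]
        seen.add(ref)
        if ref not in site.live:
            site.live[ref] = item
            report["created"].append(ref)
        elif site.live[ref] != item:
            site.live[ref] = item
            report["updated"].append(ref)
    # Anything live that the feed no longer lists is archived.
    for ref in list(site.live):
        if ref not in seen:
            site.archived[ref] = site.live.pop(ref)
            report["archived"].append(ref)
    return report
```

Because the reference is the only key, the whole loop depends on references being unique and stable between polls.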

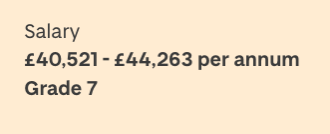

We collaborated with the People Directorate to determine which fields they required. These included standard fields such as closing date, reference, description, salary, grade, and duration. Each job also needed data showing which Unit/Department and School/Directorate it belonged to.

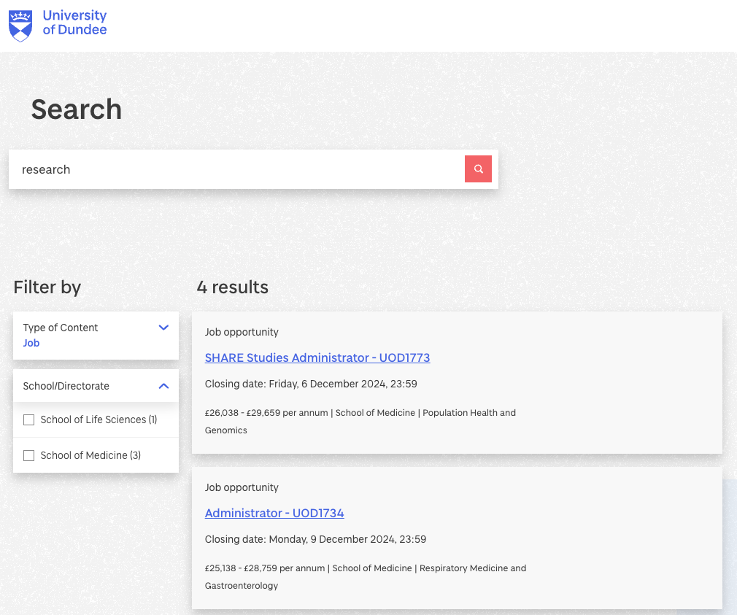

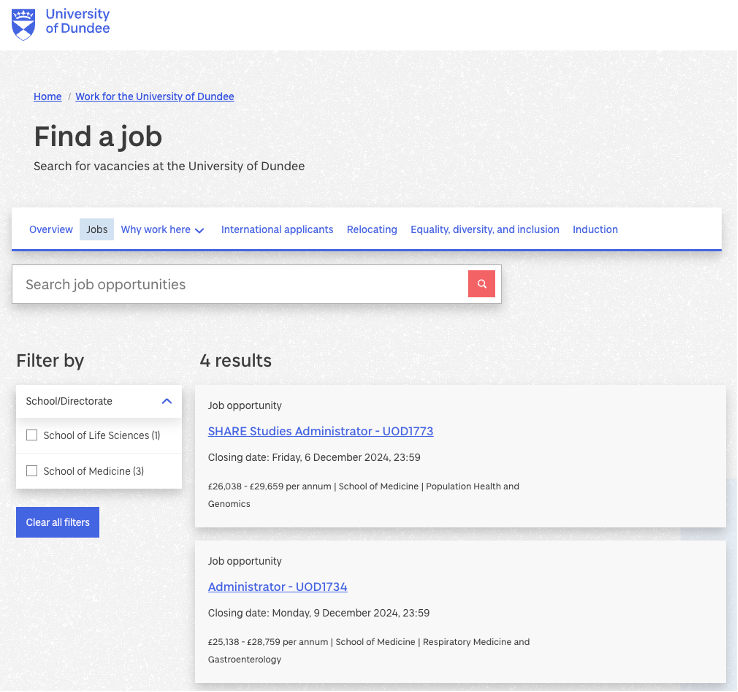

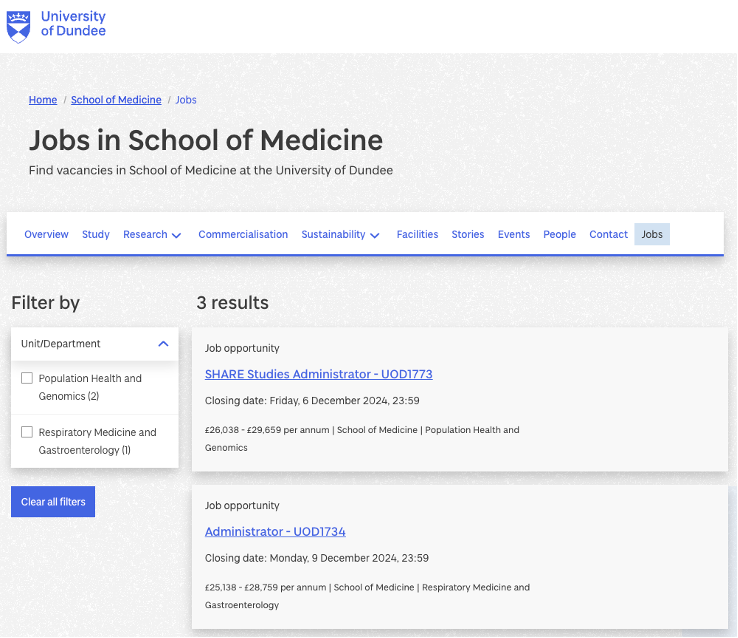

Jobs as a content type surface in three distinct ways:

- Website search

- Job list page

- School/Directorate job listing page

Integration

The aim for Web Services was to let visitors discover jobs as part of a natural user journey, while maintaining a high level of search-engine optimisation. Above all, our jobs needed to be accessible and easy to share on social media.

Our first step was to reach parity with the existing functionality for Stories, Events, and Group pages. We created a new Job content type, with most fields as free-text fields. We explored using taxonomy terms to reduce data transfer, but opted for a minimum viable product (MVP) for this iteration, to give the People Directorate more flexibility. The one area where we did require taxonomy terms was School/Directorates.

Our Drupal taxonomies include a “Group” vocabulary, which lists terms for our Schools/Directorates and their Units/Departments. This is how we display Group pages for Stories, Events, and People dynamically. To keep the data sent to the University website minimal, we would share only the Group term IDs: the People Directorate’s form shows a dropdown list of Group term names, but sends only the IDs.
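The "show names, send IDs" arrangement can be sketched in a few lines of Python. The term IDs, names, and helper functions below are hypothetical placeholders, not our real Group vocabulary; the point is that the form only needs the ID, and the website can resolve it (and its parent) on arrival, rejecting IDs it does not recognise.

```python
from typing import Optional

# Hypothetical Group vocabulary: term ID -> (name, parent term ID or None).
GROUP_TERMS = {
    10: ("School of Example Studies", None),
    11: ("Example Unit", 10),
    20: ("Example Directorate", None),
}


def dropdown_options() -> list[tuple[str, int]]:
    """What the form shows (names) paired with what it sends (IDs)."""
    return [(name, tid) for tid, (name, _parent) in GROUP_TERMS.items()]


def resolve_group(term_id: int) -> tuple[str, Optional[str]]:
    """Turn a received term ID back into (group name, parent group name)."""
    if term_id not in GROUP_TERMS:
        raise ValueError(f"Unknown Group term ID: {term_id}")
    name, parent = GROUP_TERMS[term_id]
    return name, (GROUP_TERMS[parent][0] if parent is not None else None)
```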

With the fields, content, and taxonomy requirements decided, the integration could begin. While learning to develop with Drupal, I had gained experience with the Feeds module, so I pitched it to my team as a way to streamline our job board. After the team agreed unanimously that it was suitable, I took the lead in bringing the project to completion.

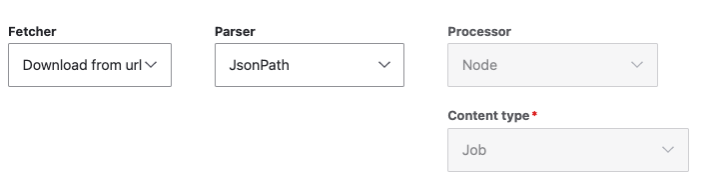

The Feeds module ecosystem is well supported in the Drupal community. Feeds provides a range of basic fetchers and parsers, and companion modules add more. Its fetchers can read a file stored in a directory, accept a file uploaded by a user, or download a file from a URL. Available parsers include CSV, HTML, XML, RSS/Atom, and a variety of JSON parsing options.

While developing my pitch for Feeds, I considered future use cases for the module. I tested Feeds XLSX Parser, which parses spreadsheets, and found that it only fetched the first sheet by default. While troubleshooting, I found a bug and created a patch. This means Feeds can serve more use cases as we continue to improve our website.

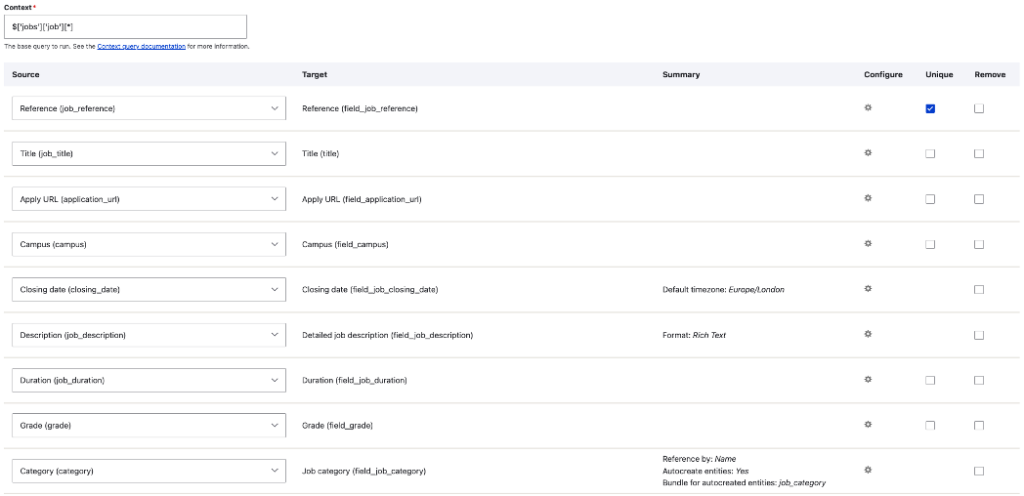

For Jobs, I chose to download a batch JSON file from a URL and parse it with JsonPath, for its versatility. The next step was to map the JSON data from the People Directorate form to our Drupal fields. An important part of this process was ensuring that each reference was unique. This matters to our audience, and it also lets Feeds detect which jobs to update and archive.
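A rough Python sketch of the mapping step is below. It is illustrative only: the top-level `jobs` array, the source keys, and the Drupal field machine names in `FIELD_MAP` are all hypothetical, and `get_path` handles only simple dotted paths, a tiny subset of what real JsonPath supports. The duplicate check shows why a unique reference matters: without it, two feed items would compete to update the same job.

```python
import json

# Hypothetical mapping: JsonPath-style source expression -> Drupal field machine name.
FIELD_MAP = {
    "$.reference": "field_reference",
    "$.title": "title",
    "$.salary.text": "field_salary",
    "$.closing_date": "field_closing_date",
}


def get_path(item: dict, path: str):
    """Resolve a dotted '$.a.b' path. A tiny subset of JsonPath, not the real thing."""
    value = item
    for key in path.removeprefix("$.").split("."):
        value = value[key]
    return value


def map_items(raw_json: str) -> list[dict]:
    """Map each feed item to Drupal field values, rejecting duplicate references."""
    items = json.loads(raw_json)["jobs"]  # assumed context: a top-level "jobs" array
    mapped, seen = [], set()
    for item in items:
        row = {drupal_field: get_path(item, path) for path, drupal_field in FIELD_MAP.items()}
        ref = row["field_reference"]
        if ref in seen:
            raise ValueError(f"Duplicate reference {ref}: updates would be ambiguous")
        seen.add(ref)
        mapped.append(row)
    return mapped
```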

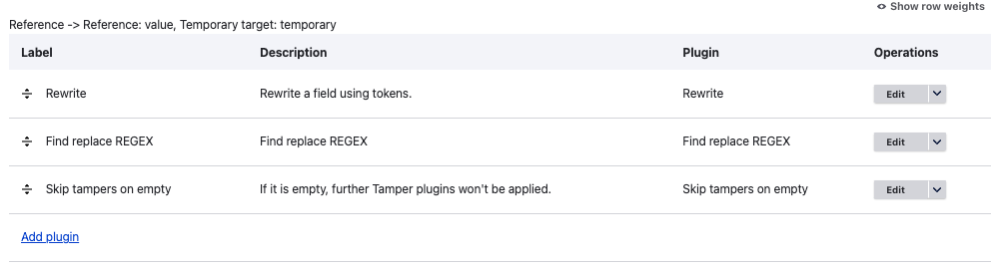

Towards the end of the project, the People Directorate told us about an issue with their form: references were randomly generated and could not be edited. We asked Broadbean to add a new field, but in the meantime we needed a solution. We already made sure the job reference appeared in the job title, so we turned to the Feeds Tamper module.

I began by writing logic to concatenate the job title and the reference number in the format [job_title] – [job_reference]. This way, if the UOD0000 reference number appeared in either the title or the reference field, we could extract it into the reference field. If it appeared in neither, the import would fail for that specific job.
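The concatenate-then-extract logic might look like this in Python. The real version ran as Feeds Tamper plugins, so treat this as a sketch: the pattern assumes references are "UOD" followed by digits, and `SkipItem` is a hypothetical exception standing in for "fail the import for this job only".

```python
import re
from typing import Optional

# Assumed reference pattern: "UOD" followed by digits (e.g. UOD0000).
REF_PATTERN = re.compile(r"\bUOD\d+\b")


class SkipItem(Exception):
    """Hypothetical signal that this one job should not be imported."""


def extract_reference(job_title: str, job_reference: str) -> Optional[str]:
    """Search '[job_title] – [job_reference]' for a UOD reference."""
    combined = f"{job_title} – {job_reference}"
    match = REF_PATTERN.search(combined)
    return match.group(0) if match else None


def reference_or_skip(job_title: str, job_reference: str) -> str:
    """Return the extracted reference, or skip this job if none is found."""
    ref = extract_reference(job_title, job_reference)
    if ref is None:
        raise SkipItem(f"No UOD reference found for {job_title!r}")
    return ref
```

Concatenating first means a single search covers both fields, so the randomly generated reference value is simply ignored when the title carries the real one.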

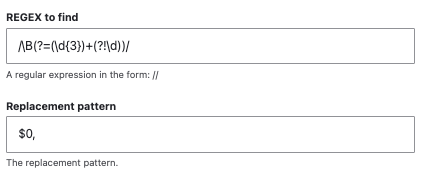

As we were already tampering with values to error-check the data we received, I made other changes. Salary ranges could be entered as “£10000” or “£10,000”. Our style guide stipulates the comma, so I used a regular expression (RegEx) to enforce it.
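The exact expression isn't reproduced here, so the following is one possible approach, written in Python rather than the PHP-style PCRE that Feeds Tamper uses. It only touches digits that directly follow a "£", so other numbers in the field are left alone, and it leaves amounts that already have commas unchanged.

```python
import re


def add_thousands_commas(salary: str) -> str:
    """Insert commas into every £ amount, e.g. '£10000' -> '£10,000'."""
    def fix(match: re.Match) -> str:
        digits = match.group(1)
        # Put a comma before each group of three digits counted from the right.
        return "£" + re.sub(r"(?<=\d)(?=(?:\d{3})+$)", ",", digits)

    return re.sub(r"£(\d+)", fix, salary)
```

Because `£(\d+)` stops at the first comma, an already formatted “£10,000” only matches “£10”, which needs no change.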

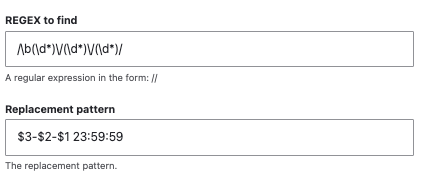

Our closing and publishing dates also needed a format that Drupal would accept. The JSON field used a DD/MM/YYYY format for simplicity, so a further RegEx converted it to “YYYY-MM-DD 23:59:59”. Setting the closing time to 23:59 follows our style guide and makes it clear to users that the job closes at the end of the date shown.
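The date rewrite can be sketched as a regex substitution that reorders the captured day, month, and year and appends the end-of-day time. This Python version is an illustration, not the Tamper configuration we used; the extra `strptime` check is an addition of ours to reject impossible dates such as 31/02.

```python
import re
from datetime import datetime

DMY = re.compile(r"^(\d{2})/(\d{2})/(\d{4})$")


def to_drupal_datetime(value: str) -> str:
    """'31/08/2024' -> '2024-08-31 23:59:59' (the end of the stated day)."""
    value = value.strip()
    if not DMY.match(value):
        raise ValueError(f"Expected DD/MM/YYYY, got {value!r}")
    converted = DMY.sub(r"\3-\2-\1 23:59:59", value)
    # Validate the result so that e.g. 31/02/2024 is rejected rather than imported.
    datetime.strptime(converted, "%Y-%m-%d %H:%M:%S")
    return converted
```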

Grade was a tricky field. We considered making it a dropdown of taxonomy terms, but that would not cover all use cases. Instead, we made it a free-text field that displays the grade beneath the salary.

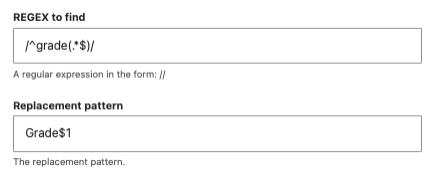

This meant that rather than entering “Grade 7”, the People Directorate could enter “7”. A RegEx first prepends “Grade” to any solitary number. A further RegEx then capitalises any occurrence of “grade” as “Grade”. If the value contains any other text, it is left as-is.

![A detailed breakdown of the regular expression used for the grade field, to force the word “Grade” before the grade number if the word is missing. “/^((?!:Grade)[0-9]*)$/” is the RegEx used to first check that the word “Grade” does not already exist. It then checks whether the rest of the content contains just a number. If it contains any other text, it leaves it intact; otherwise it uses “Grade $0” to prepend the word “Grade” before the value.](https://blog.dundee.ac.uk/web/files/2024/12/image-9.png)
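The two grade steps can be approximated in Python as follows. This is a sketch rather than the exact Tamper setup: anchoring with `^[0-9]+$` already excludes any value containing the word “Grade”, so no lookahead is needed, and `+` is used instead of `*` so that an empty value is not turned into “Grade ”. The case-insensitive second pattern is our own choice for catching “grade” in any capitalisation.

```python
import re

# A value made only of digits gets "Grade " prepended.
SOLITARY_NUMBER = re.compile(r"^([0-9]+)$")
# Any standalone occurrence of "grade", in any case, becomes "Grade".
ANY_CASE_GRADE = re.compile(r"\bgrade\b", re.IGNORECASE)


def normalise_grade(value: str) -> str:
    """'7' -> 'Grade 7', 'grade 7' -> 'Grade 7'; other text is left as-is."""
    value = value.strip()
    value = SOLITARY_NUMBER.sub(r"Grade \1", value)
    return ANY_CASE_GRADE.sub("Grade", value)
```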

Thanks to the efficiency of Feeds, we could import with every scheduled cron run. Jobs only import if Feeds detects changes to the source data, and Feeds Tamper let me handle error-checking along the way.
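Importing only changed jobs can be illustrated with a content hash stored per reference. This Python sketch shows the general idea rather than Feeds' internals; the SHA-256 over key-sorted JSON and the `items_to_import` helper are our own illustrative choices. Sorting the keys means a feed that merely reorders fields does not trigger an import.

```python
import hashlib
import json


def item_hash(item: dict) -> str:
    """Hash a canonical JSON form of the item, so key order does not matter."""
    canonical = json.dumps(item, sort_keys=True, ensure_ascii=False)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def items_to_import(items: list[dict], stored_hashes: dict[str, str]) -> list[dict]:
    """Return only items whose content changed since the last run, updating stored hashes."""
    changed = []
    for item in items:
        ref = item["reference"]
        digest = item_hash(item)
        if stored_hashes.get(ref) != digest:
            stored_hashes[ref] = digest
            changed.append(item)
    return changed
```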

The batch feed was incorrectly providing a default closing date four weeks ahead. This value was separate from the real closing date, which is what removes the job from the batch feed. It was critical that the displayed closing date was correct: we could not have potential candidates view a job, believing they had time, only to find it had closed early.

We successfully released the Jobs project on 12 August 2024. To allow this early release, I volunteered to maintain a temporary feed with regular manual corrections. This continued until Broadbean resolved the issues with the job reference and closing date fields at the end of August. Since then, the People Directorate has had full control over the job content on our website.

Journey

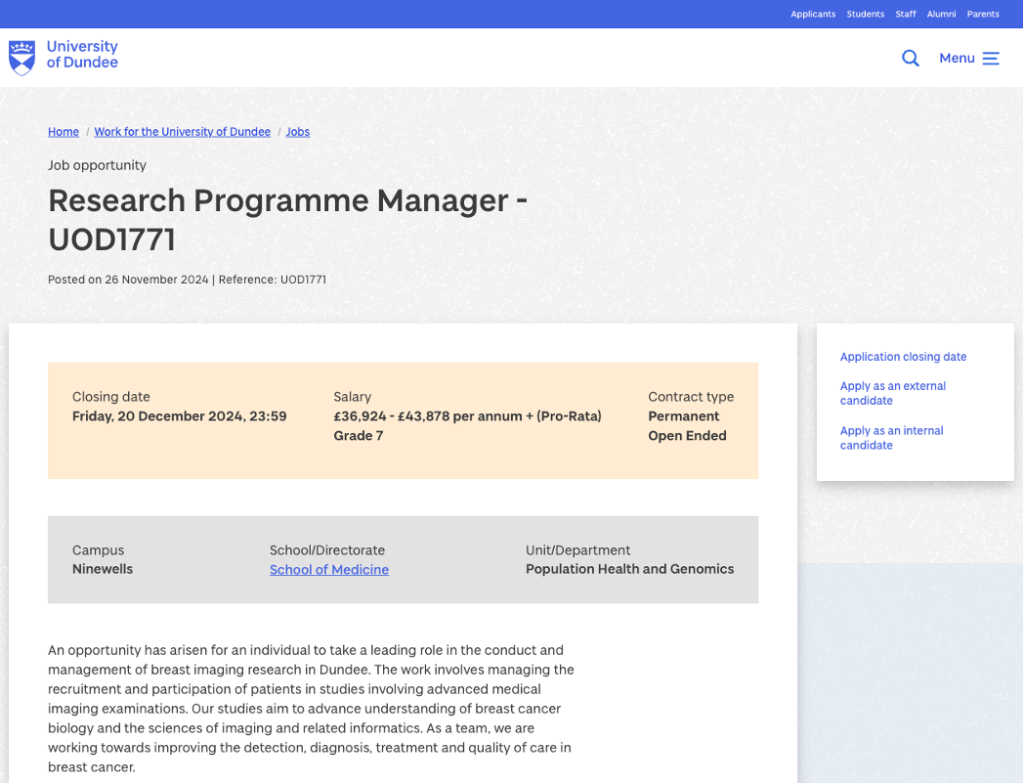

To showcase all these moving parts put together, here is an example of a research position:

We learned many lessons from the Find a job project. The Feeds module opened avenues to improve our existing import functions, and gave us ideas on how to make our imports more efficient, faster, and lighter. I am excited about the prospect of continuing to improve the University of Dundee’s website processes, particularly with the Drupal Feeds module in mind.